Govern AI with Rules, Not Values

A Vision of Specification-Driven AI

(brief content note: our focus has been more on engineering and planning than communication this quarter, but I’m excited to share this argument for a more positive version of AI governance. And more writing to come soon!

Also, if you like this, you might be interested in joining a pop-up on this topic I’m leading, hosted by Project Liberty - register here)

“it is indispensable that they should be bound down by strict rules and precedents, which serve to define and point out their duty in every particular case that comes before them”

Alexander Hamilton, Federalist 78, describing the judiciary, to become arbiters of the law

1. The case for specification-driven AI

If we continue treating AIs as human, we will yield our humanity to them. I’m claiming that specification-driven AI is a paradigm in which humans can translate our notions of norms and morality so that human-level AI systems can be required to respect human autonomy and negotiate concepts like morality as peers.

Consider this example:

If you hired a contractor for a kitchen renovation, you wouldn't share your life philosophy and aesthetic values in hopes that the contractor intuits what kind of cabinets you want. Instead, you’d provide detailed specifications: measurements, materials, deadlines, and acceptance criteria. Perhaps you'd work with a designer first, who specializes in developing these specifications. Importantly, the contractor must also adhere to building codes and safety regulations — external specifications that constrain what can be built regardless of client preferences. The contractor then delivers precisely what was asked for and provides evidence they've met the requirements.

This contractor relationship is fundamentally different from how we form collaborative relationships with other people. With employees, we train them, imbue them with company values, surround them with company culture, and guide them toward a company mission and goals. Then we grant them increasing amounts of autonomy in pursuit of our shared objectives. With children, we guide them and teach them through examples; we pass on values through principles like “treat others as you would like to be treated,” as well as providing copious feedback; and we give them increasing amounts of independence and autonomy.

I claim that effectively all today’s concerns about AI come from the fact that we are (perhaps unintentionally) trying to slot AI systems into the “human” category of the social fabric when we should be treating them as non-human entities, similar to corporations. Trust leads to anthropomorphizing AI systems which creates issues because they’re fundamentally not human. Alternatively, adopting a contractor-like framework could both dramatically reduce the likelihood of failure modes from unsafe AI and facilitate faster, more effective adoption of AI capabilities. This distinction is not merely academic. As AI capabilities expand, the way we instruct and govern these systems will fundamentally shape their impact on society.

In the remainder of this essay, I’ll explain…

Why you should be skeptical of the current strategies to “align” and improve AI,

What we can do instead: scale human review through formalization of policies,

What challenges must be overcome to adopt this paradigm, and

What the future of this approach looks like.

2. Values-based alignment is not democratic

Companies and research labs developing frontier large language models claim that the solution to misbehaving AI is “alignment,” in which the researchers more accurately and effectively embed norms and values into the AI systems themselves. However, AI researchers surveyed widely consider the question “How can one align an AI?” to be among the most important unsolved research questions in the field of AI.

An aligned AI would have internalized human values and preferences, allowing them to "do what humans want," or perhaps a specific as “do what the user wants” across diverse contexts. This goal may seem intuitive, but even if alignment were solved tomorrow, there are still challenges about what to align it to:

Your preferences vary over time: Your values today might not match your values tomorrow, next year, or five years from now. Which version of yourself should an AI align with? An AI system that defers to your future values would be deemed paternalistic, yet we (rightfully) criticize recommendation engines for exploiting our fleeting desires as they maximize engagement. Furthermore, I expect that confidence in an ostensibly aligned AI system would be further eroded if I knew it wasn’t actually aligned to me, but rather to a frontier lab’s approximation of me.

You (provably) can’t please all the people all the time: When multiple people use the same AI system, whose values take precedence? Arrow's impossibility theorem shows that it is provably mathematically impossible to combine preferences (e.g. in a vote) and preserve all of some basic, intuitive fairness properties. Even going from a group of n people with opinions on some options to saying “the group has opinions on the options” requires that you discard all but 1/n of the information! (What mechanism you use, whether it be rank-choice voting, quadratic voting, or markets, should be thought of as simply a choice of which information you’re disregarding.) By comparison, alignment seems to assume we can convince an AI system to act morally; how long after a company claims to have an AI that acts morally, will they (or others) begin to point to the AI’s actions as not just an example of, but rather the paragon of morality?

Alignment enhances cultural conflicts: If the alignment problem were solved today, AI systems become vectors for ideologies. America, China, and other countries are all struggling to ensure their values are embedded in AI systems. This concentrates extraordinary power in the hands of those who define these values, and increases geopolitical instability as countries attempt to ensure that any possible superintelligence might be their culture’s ideological successor. Furthermore, preferences evolve over time, creating a potential dilemma: either we allow future generations to update the values of long-lived AI systems (undermining the strength of alignment today), or we risk a future where humans are governed by superintelligent entities enforcing centuries-old value systems that no longer reflect contemporary moral understanding.

The interpretation problem: Values are inherently ambiguous and context-dependent. Consider a simple instruction to an AI image generator to create "historically accurate" images. Should it:

Replicate biased representation from historical training data?

Correct for historical bias while maintaining period authenticity?

Optimize for some middle ground between accuracy and contemporary values?

Moral philosophy points to the fundamental nature of the “is/ought chasm” (a.k.a. Hume’s Guillotine): you cannot, through logic alone, conclude a statement about what the world should be solely from statements about how the world is. Therefore, to reach an objectively correct answer about what an AI system should do requires that we start from some implicit or explicit premise about what “good” is.

These fundamental limitations (temporal inconsistency of individual preferences, impossibility of lossless preference aggregation, ideological amplification, and inherent ambiguity of values) reveal why the alignment paradigm faces both technical and philosophical obstacles. Rather than planning for AI system to internalize and interpret human values, we need an approach that establishes clear boundaries, enables objective verification, and preserves human agency in determining acceptable AI behaviors. Specification-driven AI offers precisely this alternative path.

3. What is specification-driven AI

Rather than embedding values into an AI to improve alignment, specification-driven (or spec-driven) AI is a family of approaches in which a user generates a formal specification — criteria expressed precisely and unambiguously so they can be automatically verified — and the AI’s output is verified against that specification, ideally with formal logic or mathematical proofs.

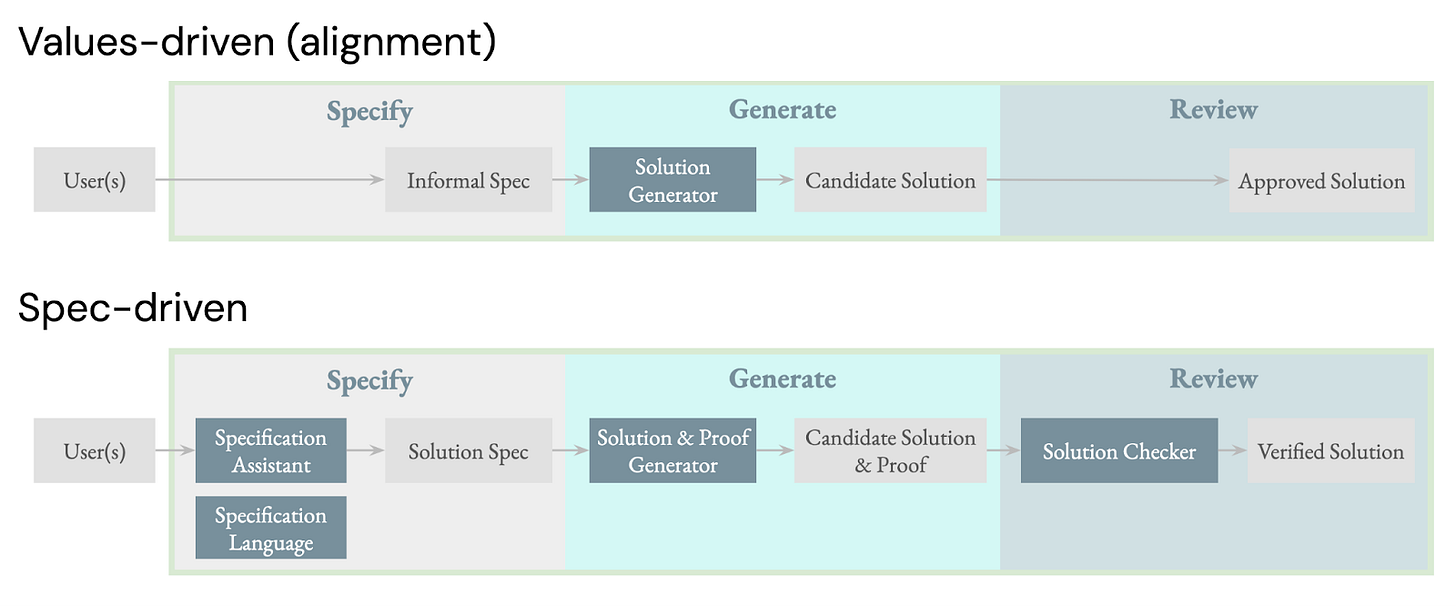

The following figure below illustrates how people currently use AI systems and compares it to a spec-driven AI workflow, which has the following steps:

First, the user first generates a human-reviewable, formal specification (the “Solution Spec” in the figure below). While the definition of a formal spec will be provided in the next subsection, consider it to be a list of all the objective properties that a solution should have. This is likely done with the help of an AI tool, but importantly, the spec can be reviewed by and explained to the human.

Once the spec is approved, a different AI system (the “Solution & Proof Generator” automatically generates a solution in addition to a mathematical proof that the solution satisfies the spec.

The proof-verification process is then a fully automated, trustless step that can be performed by a small, generalized, non-AI program.

It's worth noting that although there are multiple approaches that fit under this umbrella, the term spec-driven AI is not a common term (yet). The proposed Guaranteed-Safe AI framework and Safeguarded AI architecture would qualify, as would plans to use AI to generate formally verified software. However, strategies like Constitutional AI do not fall into the category of spec-driven AI because, in those systems, the constitution is subjective and must be interpreted by AI systems which must be trusted and the goal spectrum and AI are objective requirements that do not require trust. It also seems worth noting that spec-driven AI is a type of AI control, wherein the model is assumed to be either fallible or untrustworthy.

What is a formal specification

Formalization is the process of converting ambiguous natural language policies into precise, machine-checkable specifications. A formal spec must take inputs that are anchored in observations or measurements of the world, but can then reason about properties those inputs must have with mathematics and formal logic. (Going slightly deeper, formal logic is the process of generating conclusions from premises using axioms like “A implies B & B implies C ⇒ A implies C”.)

While it is challenging to formally specify all the relevant properties of a solution, there is already adoption or progress developing formalization techniques across various domains:

Software Verification: Formal verification is a branch of computer science in which desired properties of programs are formally specified and the software is proven against those properties. The notion of proving software properties dates back to early proposals by Alan Turing, and techniques already provide mathematical guarantees about critical software properties, including flight systems software on aircraft, train scheduling software, cryptography and computer networking libraries, and even an operating system microkernel. In most instances, adoption is limited by expertise in formal verification. However, AI could democratize these approaches, allowing non-specialists to express desired software behaviors in natural language and receive formally verified implementations.

As a separate example, the Rust programming language is growing in popularity because it enforces a property of memory safety on all programs, which could be considered a form of formal verification.Tax codes are written in natural language, but tax software run by governments are mechanized formalizations (i.e. runnable as software). However, this formalization is not typically generated with input from the original lawmakers. There are efforts to formalize the tax code into a public, formal source of truth around tax liability, enabling anyone to understand regulatory implications, and deploy autonomous AI agents to operate with confidence.

HR policies can describe objective criteria for hiring, promotion, layoffs or other workplace changes. However, these policies may be inconsistent (i.e. containing conflicting statements) or contain undefined behavior (don’t fully explain what to do in all situations). The head of the largest formal verification team in the world has spoken about the work they’ve done formalizing HR policies, and the limit on who can benefit from this formalization seems largely limited by the cost of the expertise needed to formalize policies.

Hopefully it’s intuitive how formalization could apply to other domains as well:

Building codes specify measurable properties like minimum number of exits, number of electrical outlets, sizes of windows, and structural requirements. Imagine an architect submitting building plans online with all metadata needed to automatically verify compliance with building codes. Instead of waiting weeks for manual review, they could submit their plan with evidence and proof that the plans meet all requirements. This could enable near-instant feedback and approval of compliant plans, in exchange for extra work on the architect’s side generating computational arguments for compliance. This also should enable faster iteration because the formalized policies are transparent and as complete as possible without requiring human interpretation.

Information trustworthiness: as consumers of news and other types of information, we could state what criteria we considered necessary or sufficient to accept information, and parse information into an easily-interpretable set of assumptions, arguments, and conclusions. For instance, users could specify that they do not trust specific sources unless claims are based on primary sources. Especially if combined with tools to track provenance of information, this could enable dramatically better epistemics for the general population.

Biological Specifications: A computational toxicity forecasting competition could create standardized ways to quantify chemical hazards, enabling AI systems to screen potential compounds for safety concerns before synthesis. This specification language would then allow regulatory bodies to formally specify safety requirements that must be satisfied before proceeding with novel chemical development.

Scaling Human Review; avoiding a review crisis

It might seem that I’m simply advocating for more automation of regulatory review that would benefit all humans. While I think this automation would be a dramatic improvement in today’s world of humans regulating human actions, I believe it is critical for humans reviewing AI actions, because human review doesn’t scale once we’ve deployed human-level AI agents.

Consider it in these terms: Today people (a) decide what to do, (b) decide how to do the thing, (c) do the thing, and then (d) review the outcome. As AI systems become increasingly capable, they are currently on track to take over (c), then (b), then (a), while humans are left reviewing. Humans generate fewer than 200 tokens per minute. One million tokens from GPT4.5 costs roughly $150, which is the highest cost for any available model. If costs don’t change significantly, that means a human-level AI system will be able to generate outputs at the equivalent of a human for $3,600 per working year. Since, humans cannot hand-off responsibility to an AI system, so once AI systems become roughly as capable as humans, human reviewers must either become a bottleneck on progress, abdicate review, or find a way to automate the review process. The only other alternative would be expecting all humans to be employed as reviewers of AI outputs, evaluating for compliance.

Formalization can automate this process safely and efficiently. I believe we should build toward a world where humans decide what should (and shouldn’t) be done, and AI systems have to prove their actions against these specifications.

One relevant benefit of this architecture: Specifications compose better than values: If two groups of people reach a fundamental conflict about actions being morally good or wrong (e.g. debates around equal treatment vs religious expression), there’s no clear way to rectify this conflict from the perspective of AI alignment. However, governments have processes to determine what is illegal, and we could imagine each of the above groups using the legal apparatus to set policies defining a formal boundary between legal and illegal that is a compromise in encoding good vs wrong.

In control theory, this could be mapped onto a constrained optimization problem. Training an autoregressive transformer intends to minimize the difference between the reward function and some notion of morality to be optimized. However, specs and rules set constraints. It's hard to robustly combine preferences in a way that my preference can't be cancelled out by your anti-preferences. But constraints stack nicely, similar to international treaties, national laws, and local laws: if something is prohibited at only the state or national level, then it's illegal regardless of the level at which it's illegal.

Additional benefits of spec-driven AI:

The paradigm can be adopted incrementally: Formalization doesn't require an all-or-nothing approach. Organizations or governments can first formalize a subset of their policies, starting with domains most amenable to formalization, then provide a "fast lane" of review for proposals that demonstrably comply with the formalized policies. As these interfaces become increasingly common and adopted amongst companies using AI agents, organizations can gradually expand the scope of formalized policies as they gain confidence in the approach.

Specifications enable black-box trust: One intrinsic risk from this is that embedding values and ideals into a machine makes that machine a vector for your ideologies. This means that anyone with a competing ideology will see yours as an existential threat. However, if your machine is bound by formalized requirements, you should be comfortable using anyone else's system because that output will also have to meet the requirements; any dissatisfaction you have with the outcome could be mapped, purely to an error or omission in the specification.

We have the social institutions and mechanisms to set rules: No society on earth is well-suited to curate a set of examples sufficient to convey its values. And even if it were, alignment is not transparent, and there’s an entire field of research based on the open question of interpreting the actions of AI systems. By comparison specifications are transparent, they can be debated, refined, and revised through democratic processes. All countries have mechanisms to set rules for citizens to follow, and democratic countries have mechanisms to ensure this is participatory. Rather than companies leaving liability to the users of their increasingly autonomous AI systems, or letting those systems be limited by alignment, we should have tools that first formalize the laws to ensure verifiable compliance, and eventually provide these tools to legislators and onboard governance processes so that we don’t risk AI systems that don’t follow laws.

4. Implementation Challenges and Solutions

I’m a staunch believer that to truly have confidence in the existence of a solution is to solve the problem. As this problem is not yet solved, there must therefore be details omitted and questions unanswered. This section is intended to address some of the bigger open questions that must be answered in addition to the significant quantity of engineering that will be needed to make this approach successful.

Q: What is the likelihood spec-driven AI succeeds as a paradigm?

A: Enough that I think it's worth doing, but success is far from guaranteed. I'm not pursuing this because I think it's easily tractable, but because it feels so needed and potentially valuable if successful.

Q: Previous attempts to create formal specifications have been prohibitively expensive. How is this approach different?

A: Prior formal verification efforts like the seL4 microkernel required approximately 20 person-years to generate 10,000 lines of verified code—roughly 200 hours per line of code. At this rate, formalization is only practical for the most security critical applications. However, today's language models have shown promising capabilities on formal reasoning benchmarks with multiple models scoring above 80% on the MATH benchmark of word problems and translate between specification languages. While previously only specialists could write formal specifications, AI assistants are increasingly enabling non-specialists to participate in the formalization process, opening this approach to widespread adoption.

Q: Haven’t there been attempts to create robust ontologies for formalizing?

A: The Internet's early days saw numerous attempts at creating knowledge databases and ontologies, most of which failed because people needed to learn complex schemas in order to use them. The critical difference today is that AI can learn the rules, syntax, and terms for a formal specification language, and handle the translation between natural language and formal specifications, removing this adoption barrier. There are even systems like Logical English which was constructed to be easy to read and understand as an English speaker with no formal logic experience; writing in this system is challenging, but having a formal spec in logical english that could be compiled to a more succinct and mathematical logic (by a formally verified compiler) would enable a user incredibly high leverage without having to learn to write in a new ontology.

Q: Proving things about software is challenging enough, but how can you claim to prove things about the real world?

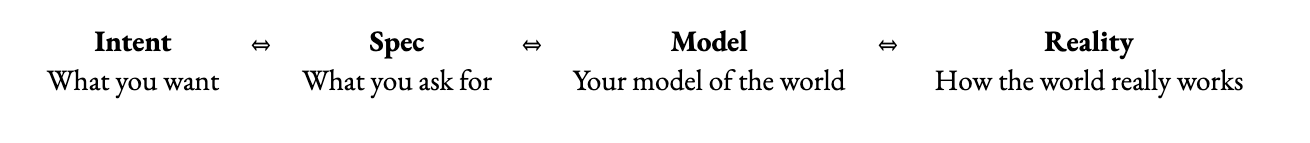

A: Formal verification provides mathematical guarantees about certain properties, but those guarantees are only as good as the specifications and world models they're based on. To understand the power and limitations of specification-driven AI, I think about four components in the verification workflow:

Only the spec and the model can be written down and examined. A model ⇔ reality gap will grow because our knowledge of the universe is incomplete and our ability to express all relevant aspects of the world is limited. The best mitigation is to build better tools for people to contribute collaborative improvements to the world model, which is an active area of research and investment.

Specification-based approaches can mathematically prove there's no spec ⇔ model gap, ensuring that what gets built actually matches what was specified. This is powerful because it eliminates an entire class of implementation errors.

The intent ⇔ spec gap represents the challenge of translating what you want into a formal specification.For example, a self-driving car might have a specification to "maintain safe distance from obstacles" that fails to account for momentum or slippery roads. One mitigation is to find simpler properties that are easier to formalize and/or prove (e.g. the car shouldn’t drive over a certain speed if ice appears to be present). Another is to provide tools to help users reason about the spec and its implications in the world model, asking questions and understanding design implications.Our approach provides tools to explore edge cases and test specifications against diverse scenarios before implementation.

Rather than claiming to eliminate all gaps between intent and reality, specification-driven AI gives us concrete ways to narrow these gaps systematically while providing mathematical guarantees for the parts that can be formalized.

5. Progress and next-steps for spec-driven AI

Several tools and initiatives are emerging to enable a transition from an alignment-based paradigm to a specification-driven paradigm. This section outlines the current landscape and future directions.

The main components for formal verification are specifications, solutions, and proofs that the solutions satisfy the specs. To deploy these systems widely we need progress making sure we have good specs and good proofs, as well as putting the whole thing together:

Tools for specs

Specification validation:

To ensure specifications match intent, we need spec validation tools can generate test cases, counterexamples, and natural language explanations of formal specifications. These help humans understand the consequences of their specifications before implementation. My nonprofit, Atlas Computing, is building a specification IDE that uses an LLM to help users understand and improve the mapping between a natural language specification and a formal spec, closing the aforementioned intent ⇔ spec gap.

The goal is essentially to load the relevant components of the specification into a person's brain so they can understand if that's actually what they want. This approach is more robust than externalizing only a subset of requirements and hoping the implementation system correctly interprets them.

AI-Powered Translation:

Large language models can translate between natural language statements and formal specifications, making formalization accessible to non-specialists. This removes the primary barrier that prevented earlier ontology projects from succeeding. You no longer need to learn complex schemas—AI can handle that translation for you.

Tools for proof generation

Verification systems

Proof verification systems like Coq, and Isabelle have been under development for decades (recently joined by Lean as a rising star) and will serve as infrastructure for proof generation. These systems provide the mathematical foundation for verifying that implementations meet specifications. While historically requiring specialized expertise, improved interfaces and language model integrations are making these tools more accessible.

The growing interest in formal verification has also led to a renewed interest in investing in formal verification tools and projects. This includes new proof languages, proof libraries, and proof verification systems. The world's largest formal logic team is the Automated Reasoning Group (ARG) at Amazon Web Services; this team includes Leonardo de Moura, who is also leading a Focused Research Organization with significant funding for 5 years to improve the Lean proof assistant as a foundational tool in formal verification. The Lean FRO (Lean Focused Research Organization) has received approximately $50 million in funding to advance the Lean theorem prover and make formal mathematics more accessible.

AI-based Solution and Proof Generation

Several organizations are developing systems to automate the generation of mathematical proofs:

OpenAI and Epoch AI: OpenAI has funded Epoch AI to collaborate on the Frontier Math benchmark, hoping to improve the mathematical capabilities of language models. Their research has shown promising results in applying language models to formal mathematics.

AlphaGeometry2: In February of this year, DeepMind's AlphaGeometry2 demonstrated the ability to perform at gold-medal level on International Mathematics Olympiad questions. AlphaProof, another DeepMind project, is built using Lean.

Startups: Harmonic has raised $18 million to build tools for formal verification and mathematical reasoning, with the goal of enhancing AI safety through provable guarantees. Similarly, Morpheus (founded by OpenAI alumni) raised $20 million to develop systems that can generate and verify mathematical proofs.

DARPA: DARPA has run multiple programs over the past decade to improve tools, methods, and practices around formal verification and continues their support of the field, due to the promise it shows for enhancing national security. Their Automated Rapid Certification Of Software (ARCOS) program aims to develop tools for automated formal verification of mission-critical software while their Pipelined Reasoning of Verifiers Enabling Robust Systems (PROVERS) program aims to facilitate proof repair.

Spec-driven AI coordination

I’m also co-organizing the most recent in a series of workshops with the authors of the Guaranteed-Safe AI paper, which includes Yoshua Bengio (Turing Award winner), David “davidad” Dalrymple (who leads the £59M Safeguarded AI research program at ARIA) and multiple other highly regarded professors in the fields of AI and formal verification. The goal of these workshops is to coordinate with various researchers, funders, and organization builders in order to identify gaps and help drive adoption of specification-based AI as a widely useful and safer system. These efforts aim to identify high-priority domains for initial application of specification-driven approaches and develop roadmaps for necessary technical advancements.

A Path Forward

As AI systems become more powerful, the question of how to govern them becomes increasingly urgent. The specification-driven paradigm offers a practical, democratic alternative to values-based alignment approaches. By treating AI systems more like contractors than employees—providing clear specifications rather than hoping they internalize our values—we can build systems that reliably do what we want because we can verify it.

This approach requires advances in formal methods, natural language understanding, and human-computer interaction. It also demands a shift in mindset from both AI developers and policymakers. Rather than building increasingly autonomous systems and hoping they share our values, we should build increasingly verifiable systems that demonstrably follow our rules.

We’re seeking collaborators:

Please reach out if:

You work in research and are interested in developing any of the aforementioned tools

You work in policy and want to explore expressing regulations as formal specifications

You work in industry and you want to try out verification technologies to more safely leverage AI advancements

You want to know more about how to demand transparency and verifiability from AI systems that impact your life

The future of AI doesn't have to be a choice between stagnation and uncontrolled autonomous AI systems. With specification-driven approaches, we can harness AI's transformative potential while maintaining meaningful human oversight. The time to build this future is now, before powerful AI systems become too entrenched in the alignment-based paradigm to change course.