A refinement-based paradigm for code generation

Consider this to be an extended answer to the question “why would you build the IDE for specification” described in our previous blogpost. The questions in this document are a modified+truncated Heilmeier Catechism that were part of a (rejected) proposal, but I wanted to share it as a public artifact.

“How is it done today, and what are the limits of current practice?” What’s the default trajectory + why is that not ideal?

All strategies for reducing AI risk follow the same limited paradigm: some combination of evaluations and benchmarks (measuring how capable or risky the AI systems are), red-teaming (trying to get AI systems to do the right thing), and “alignment”. Alignment is a vague notion that you’re encoding your values into the AI system itself, so that you don’t have to review its behavior.

Relying on this paradigm is fundamentally risky for many reasons, the first of which is that you have no reason to believe that the system is aligned with your goals, or that alignment is even possible. Additionally, you shouldn’t believe these practices can catch all possible failure modes. Lastly, alignment implies researchers are getting systems to behave well by indoctrinating them with cultural preferences/norms/values, which means that AI systems become vectors for ideologies.

Atlas Computing wants to build and deploy an alternative paradigm. Instead of handing off decision-making to AI systems and hoping that the AI systems will behave as the user would like, we propose building tools that set rules for AI systems that they prove they’re following. This is very similar to the research directions proposed by Davidad, Yoshua Bengio, Stuart Russell, Max Tegmark, and others. But rather than a pure research effort, we want to build and deploy prototypes of systems to make human review possible at scale. The natural place to start doing this is with a technologically adept and forward-looking government. We hope this will help establish Singapore as an ambitious and pragmatic international partner on AI innovation and governance within raising security and resilience baselines for AI and upskilling the workforce through sector specific AI training programs.

Not all areas of AI use are equally risky - we intend to start with the systems where we believe that our paradigm of specification-based AI will show the strongest benefits, namely AI systems generating software and the structuring of natural language responses. This proposal comprises 3 parts:

The remainder of part 1 describes the overarching vision of specification-based AI

Part 2 describes our first development direction: developing and validating formal specifications of software on the path to specification-driven AI generation of software

Part 3 describes a useful tool to use specifications to improve the quality of responses from large language models (LLMs) to reduce hallucinations and improve communication quality and clarity.

We’re proposing pursuing the work in Part 2 and Part 3 simultaneously.

What are you trying to do? What is the vision? Articulate your objectives using absolutely no jargon.

From above: “we propose building tools that set rules for AI systems that they prove they’re following.” Let’s break down this description of the paradigm we’re proposing.

“rules for AI systems”:

Users of AI systems should be able to describe in very precise terms what properties AI outputs should have – this holds for various types of AI outputs, like software, pictures, audio, engineering designs, news articles, and legal opinions. For a concrete example, let’s consider an AI system that generates images for simplicity, though part 2 of this proposal will focus on AI-generated software. At present, it’s very challenging for genAI systems to generate images that have every named feature (especially text).

We need a language to set rules for different types of AI outputs. (Note that this isn’t unprecedented - we have legal terms for various domains of law.) Constitutional AI is an imprecise form of this where the specifications are written in plain English (natural language) where a different language model plays the role of adjudicator. But the languages need to be very precise, so that we don’t have to abdicate review to an AI system. For our image-generating example, this language would likely include terms for image styles, as well as words that denote presence/absence/location/orientation of a feature.

This specification language does not need be able to describe every aspect of the AI generated output, but should be the medium through which user preferences and governance processes can control the content. For example, in an image, a US Supreme Court Justice once said that the threshold for an indecent image is “I know it when I see it”, and while we don’t argue for the elimination of subjective opinion or human evaluation, objective specifications could define guidelines or conservative boundaries to empower human review.

“That they prove they’re following”:

Once we have specified what we want from an AI output, the AI system should present the user with both the output and corresponding evidence or proof that the output follows the rules. This is analogous to compliance processes (wherein solutions are presented with evidence that the solution adheres to relevant requirements). However, in this case, the required properties and evidence should be able to be evaluated by computers so as not to increase the burden of review. One could imagine one day applying this to not just formal verification of software but simplifying other aspects of compliance, ranging from structural engineering to early-stage drug development (validated via simulation).

“tools that set rules”:

As these rules might end up looking like a programming language in their own right, it is important to build tools to make it easy for normal users to set these rules and understand the implications of these rules..

“we propose building tools”:

We believe that the best first step to convincing anyone this is a better workflow is to start by prototyping the tools.

The intuitive next question becomes “how would these tools work?”. We envision a world where AI systems empower you by helping you understand and manage the complexity of the world — not serving you so that you can abdicate control and responsibility.

Someone with minimal (or even no) technical background should be able to use AI to develop a new software application, design a structure, write a book, or generate a new work of art while deciding exactly how much attention to pay to any design decision.

Their tools should empower them to justify any decision to even an expert in that field.

They should be able to generate descriptions of software systems or any other AI-generated artifact at any level of specificity, and use AI tools to refine the specificity of those requirements until the system is sufficiently constrained, at which point AI tools generate the artifact and prove that it matches the user-generated specifications.

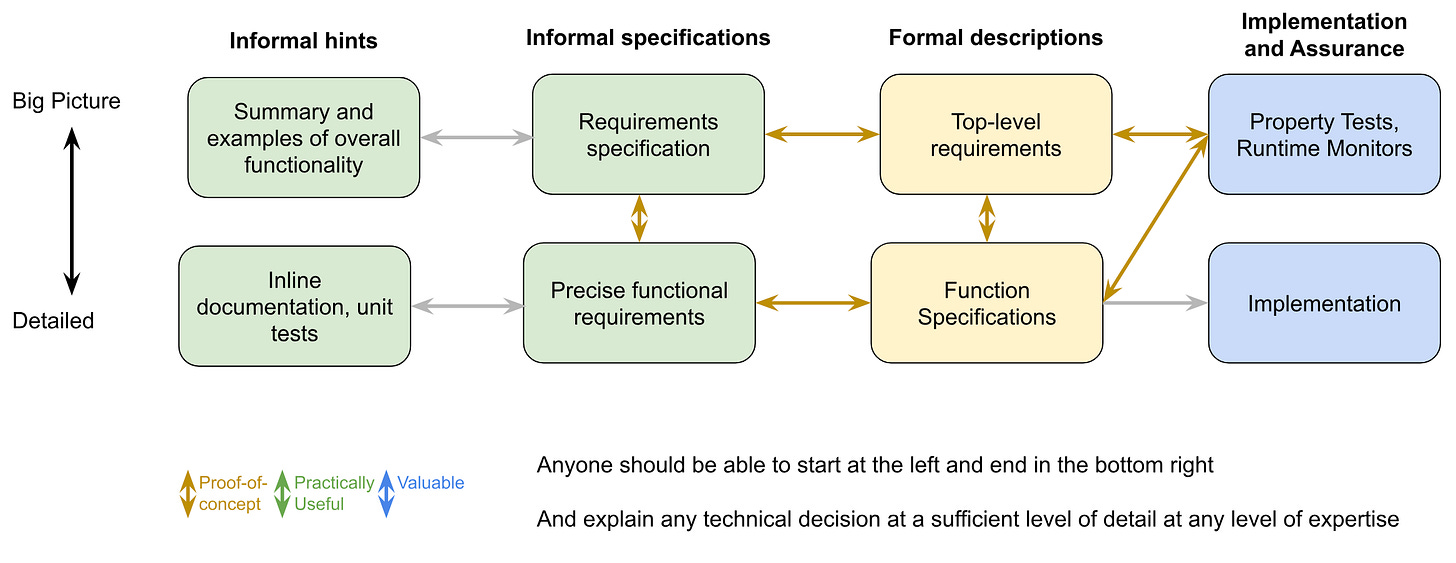

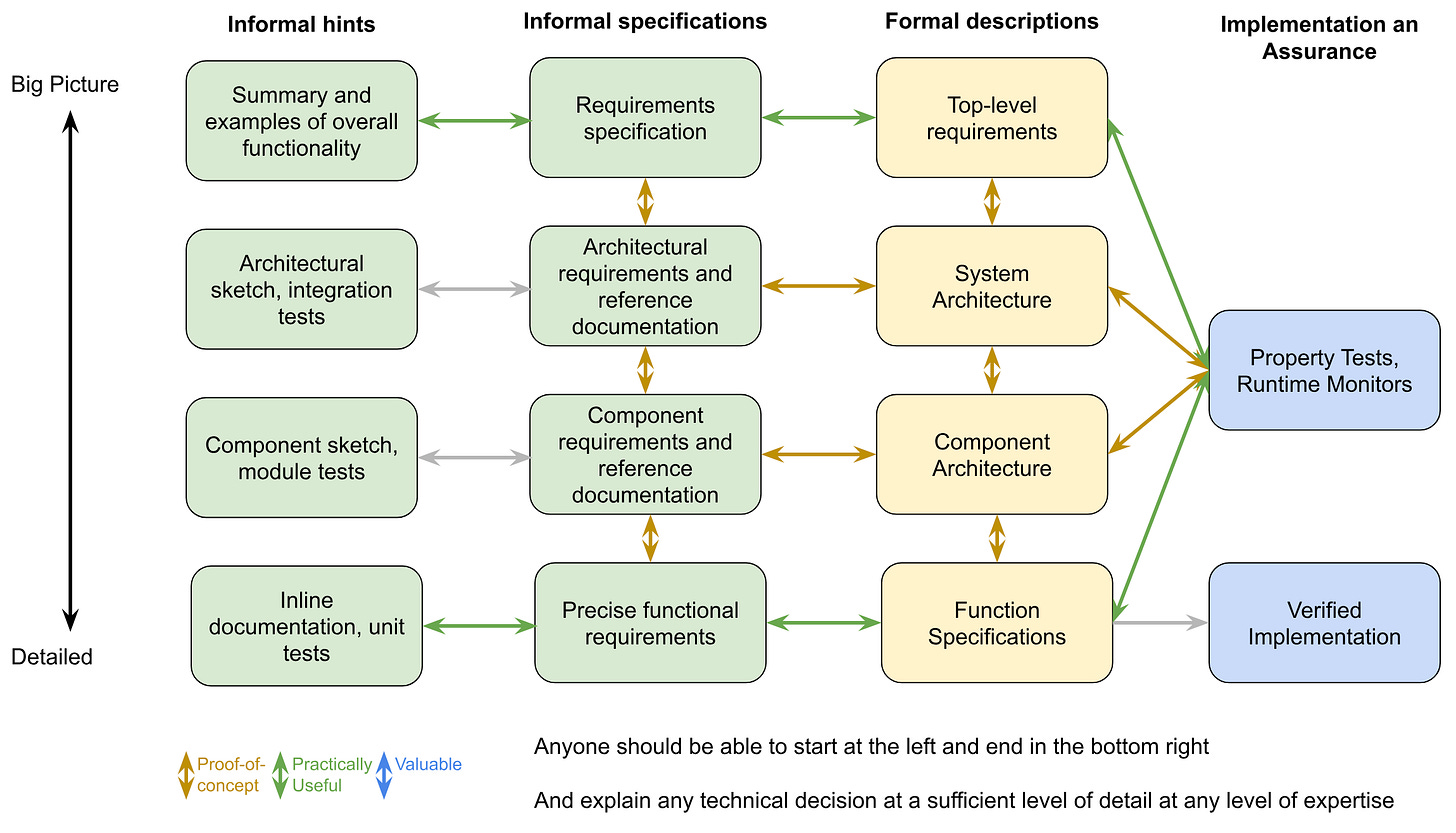

The following figure shows different forms of possible specifications when designing a complex system. We expect a user to start with an informal, big-picture sense of the desired solution (i.e. the left column) and use AI tooling to identify and make well-informed design decisions until tests and an implementation are reached.

Here, every grey arrow is a tool that helps you refine your model of what you want to build with the AI system. The tool should help you ensure consistency across the various levels of abstraction and formality, and could/will look like a our IDE with a pair of panels, as described in our previous post. (Here’s the video demo of the current status of our tool if you missed it.)

While this diagram could apply to multiple types of AI outputs, for the remainder of this proposal, examples will focus on AI systems generating software. We choose this as our first direction because formal languages for completely specifying the behavior of software already exist.

How can this be broken down into manageable steps?

This can be built incrementally by identifying the parts of the above diagram that are already labor-intensive actions currently done by hand, and that building better tools for those actions to enable step-by-step progress toward a comprehensive product. We propose the following roadmap as a rough order (where we’re building tools represented here as arrows, as they make conversions between artifacts).

Success would likely be measured by traditional usage metrics, like the number of active users, their reports on the effectiveness of the tool, and the tool’s prospects to impact a larger number of people.

Year 1

In the first year, we focus on small software systems that are composed of a small number of functions and develop tools to convert informal specifications to formal descriptions and property tests.

Year 2

In the second year, we start looking at larger systems and adapt our system to incorporate architectural requirements in addition to the fine-structure and high-level requirements.

Year 3

In the third (and last year of the proposal) we complete and polish most translation tools. Additionally, we prototype tools to generate verified implementations, though we expect significant progress will be made on this front by other efforts to advance AI-based code synthesis and AI for proof generation.

After the first year, we would also hope to deploy enough tooling into practice to showcase our implementations of specification-based AI.

By year 3, the tool should support each of the following engineering workflows:

New workflow: Start a new Mapped Project from scratch

Initialize workflow: Convert an existing project into a Mapped Project

Update workflow: Evolve a Mapped Project as part of development or maintenance work

In summary

We want to empower people who have an idea of what they want to build with an AI system to:

continuously refine their sense of what they want built,

make informed design decisions, prompted by AI systems, and

focus on requirements of *what* should be built, and be able to ignore how it’s built.

We think that looks like an IDE for specifying properties of software, so we’re building the platform.